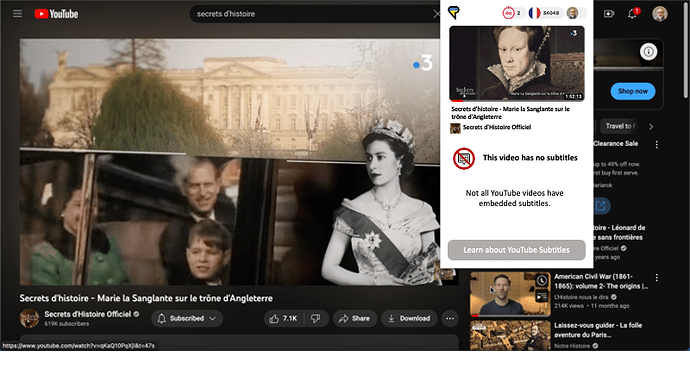

I can’t import videos from YouTube for learning. I hope it gets fixed soon.

I understand now: if the original video has no subtitles uploaded to YouTube, it can’t be imported into LingQ.

That is correct, yes. You can only import videos with available subtitles.

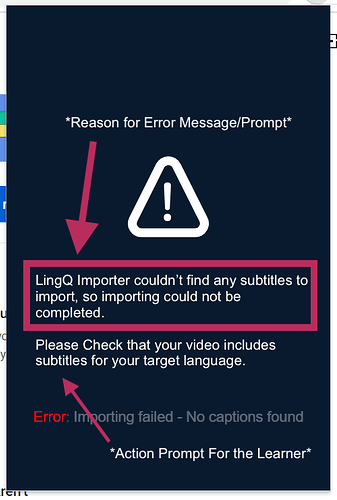

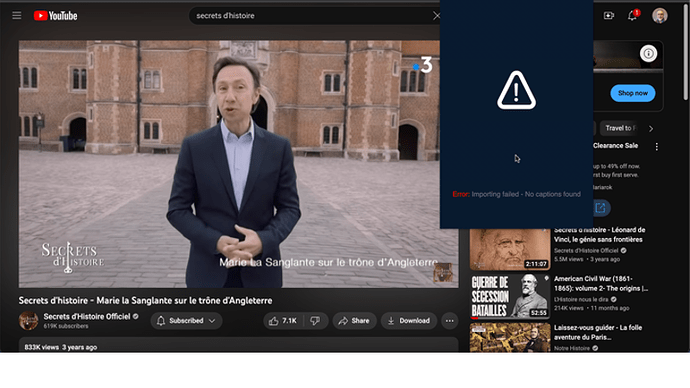

The error message display area is huge yet conveys minimal info. Perhaps the way the more detailed explanation is phrased here is good draft text for more helpful error messaging. Also, perhaps in this scenario a different, even more subtle, icon could be used.

Also, maybe a good call to action is possible, beyond the “you have reached a deadend” style of error messaging.

Given the design choices here, you can’t really fault the user for perceiving it as an issue needing to be fixed.

Perhaps the UX could be better here.

@zoran, may I propose the following addition to the “Error: Importing failed” message?

Not sure if the action prompt should read:

Please [c]heck that your video includes subtitles for your target language [emphasis added].

or something else like:

Please check that your video includes subtitles.

But it’s an idea to describe the problem and the action the learner might be able to take to follow up on it.

It does actually say “No captions found” at the bottom of that popup, but we’ll see what we can do to make it more obvious.

Thank you for the end user engagement and your mockup!

I love LingQ and will invest in the improvement of the platform with a quite detailed response here.

Rather than “fixing an error message,” let’s reframe the question to an orientation to where you want to make it as easy, friendly, and delightful as possible.

First, do what you can, when you can, for the convenience of the user. When the user has clicked on the web extension button in their browser, you have everything you need to determine whether or not the video has subtitles. Rather than require the user to take a subsequent action only to tell them it failed (when you already have all the info you need to know it’s going to fail), do a bit more on their behalf. Contemporary experience design standards work much like this, and this is actually what makes things “obvious” in 2023: the experience designers act with strong intentionality, but the user doesn’t even consciously see it. Don’t even show the [Import] button until you know you can import it. Don’t even show the [Import] button until you can help the language learner decide whether or not they want to engage this content. (The code that pulls from the YouTube API is in the wrong spot in the flow IMO.)

Second, there’s so much more you can usefully do before showing that [Import] button to the user beyond confirming there are subtitles in the video. Analyze the subtitles! Here are some extremely useful things you could do on behalf of us language learners.

- Confirm the language of the subtitles. You can take the text, give it to ChatGPT or similar and ask it, “What language is the following text in?” If the subtitles of the video match the language that the user is studying at the moment, awesome. If the subtitles of the video match a different language that the user studies (another language on the user’s profile), think how you’d ask them if they’d like to flip over to that language. Then, if the subtitles are in a language that the user has never studied, think about how you’d like to present that information to the user and ask them what they’d like to do. There are actually four scenarios to handle: 1) subtitles exist and are in the language that the user is studying at the moment, 2) subtitles exist and are in a different language that the user studies, 3) subtitles exist and are in a language that the user hasn’t studied on LingQ, and then finally, 4) subtitles don’t exist. All four really solicit different calls to action.

- Help the user know if they’d even want to import it and try to interact with the content. Again, you can do this even before you’ve had the user press the [Import] button. They’ve voluntarily engaged the LingQ brand from the separate experience context of YouTube in their browser, and you want to provide them as immediately delightful an experience as possible. There’s much you can do just from the user doing that first simple click on your web extension’s LingQ logo. Analyze the subtitles to help the LingQ user quickly decide if they want to engage the content. I think the two primary factors are these: 1) Is it at the right level? 2) Is the content of interest? Here, you can build on the foundations you already have with the known vocabulary, but in the generative AI era of language learning, you can do substantially more. You can prompt ChatGPT or similar with questions such as 1) “on the CEFR scale from A1 to C2, what is the level of the text?”, as well as 2) “please extract the top five keywords of the text” and maybe even “please summarize the text in one sentence.” The manual “Import to” drop-down, while appropriate for the pre-AI era of language learning, may be outliving its utility. Similarly, the manual “Add Tags” drop-down may be outliving its utility as well. Feel free to check your log files if they have this information, but I’d guess that many users are like me in that they don’t bother manually cataloging, especially before even deciding whether or not to engage the content.
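To make the four subtitle scenarios concrete, here is a minimal Python sketch of how they might be told apart once the subtitle language is known. I have no knowledge of how LingQ's importer is actually written, so every name here (`ImportScenario`, `classify_import`, the call-to-action wording) is hypothetical and only meant to show that each case can lead to its own prompt.

```python
from enum import Enum


class ImportScenario(Enum):
    """The four cases described above (names are illustrative only)."""
    CURRENT_LANGUAGE = 1        # subtitles match the language being studied now
    OTHER_STUDIED_LANGUAGE = 2  # subtitles match another language on the profile
    UNSTUDIED_LANGUAGE = 3      # subtitles are in a language never studied
    NO_SUBTITLES = 4            # the video has no subtitles at all


def classify_import(subtitle_language, current_language, studied_languages):
    """Map a detected subtitle language onto one of the four scenarios.

    subtitle_language is None when no subtitles were found.
    """
    if subtitle_language is None:
        return ImportScenario.NO_SUBTITLES
    if subtitle_language == current_language:
        return ImportScenario.CURRENT_LANGUAGE
    if subtitle_language in studied_languages:
        return ImportScenario.OTHER_STUDIED_LANGUAGE
    return ImportScenario.UNSTUDIED_LANGUAGE


# Hypothetical wording; each scenario gets a different call to action.
CALLS_TO_ACTION = {
    ImportScenario.CURRENT_LANGUAGE: "Import this lesson",
    ImportScenario.OTHER_STUDIED_LANGUAGE: "Switch language and import",
    ImportScenario.UNSTUDIED_LANGUAGE: "Start this language on LingQ?",
    ImportScenario.NO_SUBTITLES: "Learn how to find videos with subtitles",
}
```

For example, `classify_import("de", "fr", {"fr", "de"})` falls into the second scenario, so the extension could offer to flip over to German instead of showing an error.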

Third, make it as visually relevant, inviting, and compelling as possible.

I offer the following screenshots with both LingQ’s as-is and some mock-up that I believe would be INCREDIBLE should you want to do it.

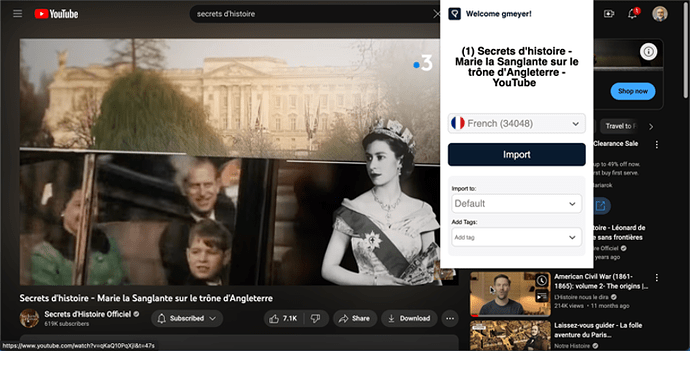

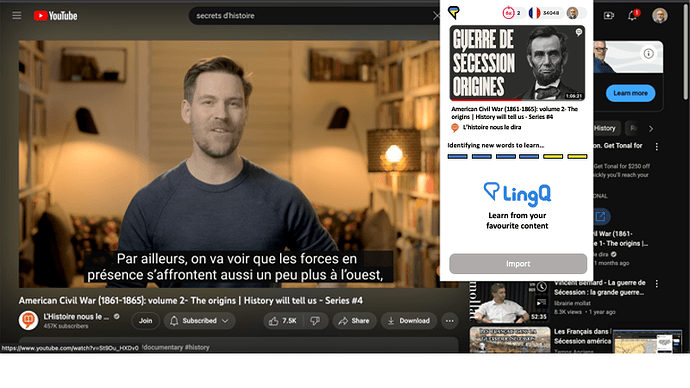

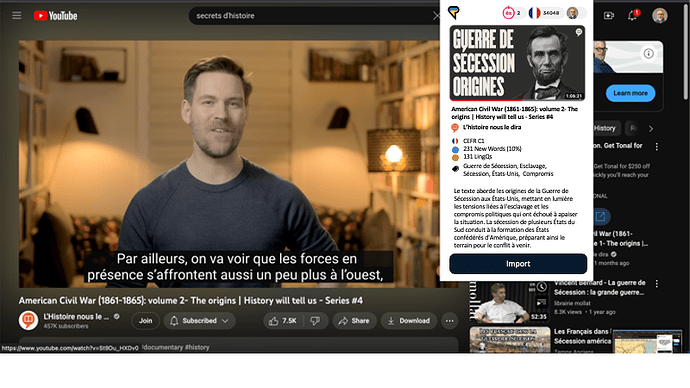

Here is the UX as it exists today when it fails to import because of lack of subtitles in the video.

You ask the user to click the [Import] button.

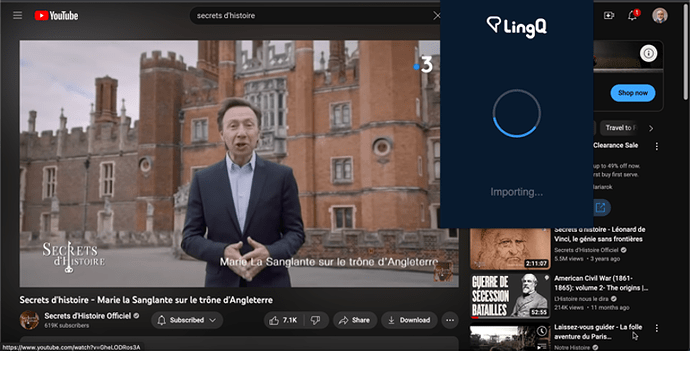

While importing, there’s a spinner.

Then this error message appears giving context clues that the user has done something wrong.

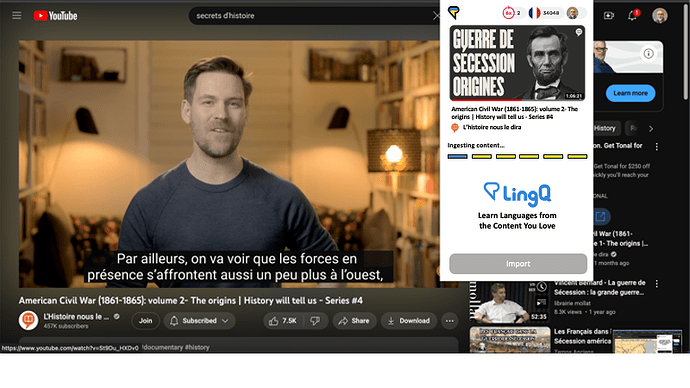

Moving from the as-is, let’s brainstorm on a possible to-be. What if instead, at least when all goes well, you had a user experience like the following.

Upon clicking on the web extension icon in the browser itself, you get started ingesting the content. As the process proceeds you give the user awareness of the progress and you promote the value of your brand. (I pulled the key phrases from LingQ’s home page.)

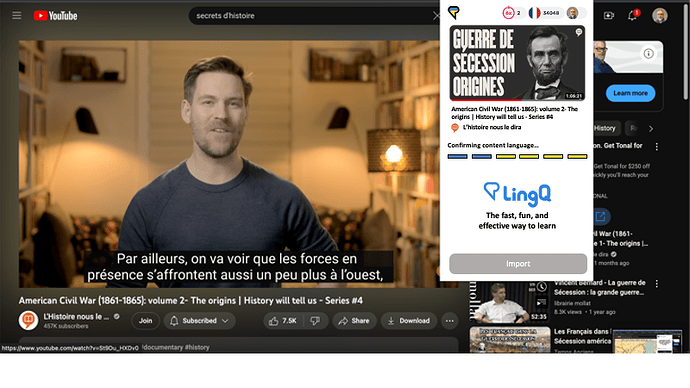

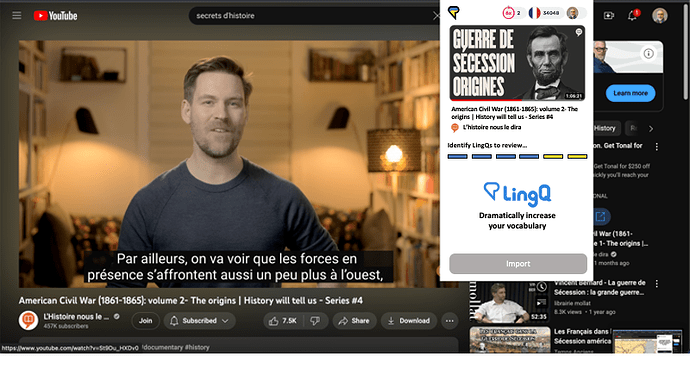

After having fetched the subtitles successfully from YouTube you walk the user through progress and promote LingQ’s incredible value proposition.

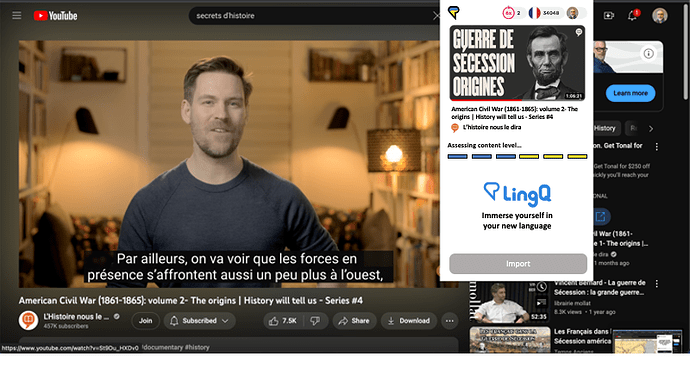

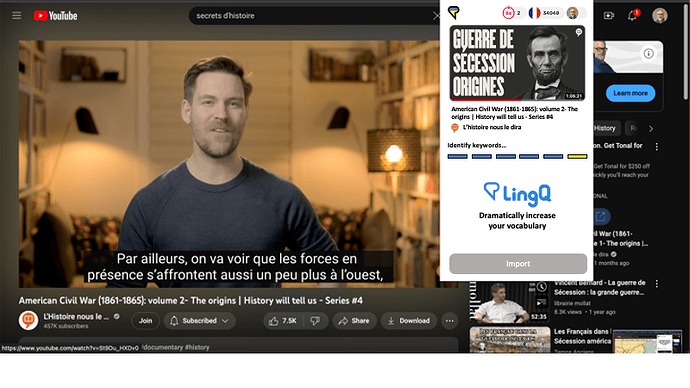

It continues from there as your integrated generative AI analyzes the subtitle lesson text…

And you also analyze the lesson text vis-a-vis the user’s known vocabulary…

All of this happens even before you’ve bothered the user with a second button click…

You don’t necessarily have to make the status updates directly correspond to what’s happening under the hood (or bonnet).

I’m not sure how many interstitial updates you want to give but you want to show the progress, be engaging and promote your value proposition, creating anticipation to engage the content in order to achieve one’s language-learning goals.

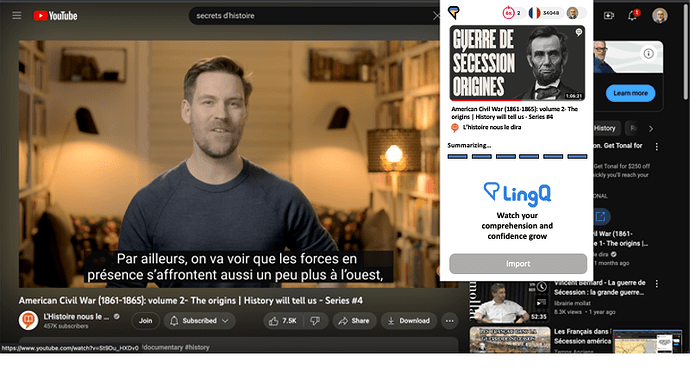

Then, after a single click you’re ready to help the LingQ user quickly assess whether or not the content is worth their time to engage. Is it interesting? Is it at the right level? Give the user what they need to decide before even a second mouse click.

At this point in this example, I see that the content is at a C1 level in French and that there are 10% new words vs. my LingQ vocabulary. This will be a bit tough for me. Maybe I can engage it but I’m going to need to spend time reading the lesson maybe before the video watching/listen and maybe after again as well to really benefit from it. Given that it’s a full hour long, it’s a fairly big investment in study time. Does this all match with the time and motivation and goals that I have right now in how I want to engage comprehensible input?

I can decide all this with a single mouse click and within a few seconds.
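As a rough illustration of the "new words vs. my vocabulary" figure, here is a small Python sketch. It assumes the percentage is computed over unique words and uses a naive tokenizer that splits on anything that is not a letter (so “l’histoire” becomes “l” and “histoire”); I don't know how LingQ actually counts, and the function name `new_word_percentage` is made up.

```python
import re


def new_word_percentage(text, known_words):
    """Return the share of unique words in text that are not in known_words.

    Assumption: the comparison is case-insensitive and based on unique
    words, not on every occurrence.
    """
    # [^\W\d_]+ matches runs of letters, including accented ones.
    unique_words = set(re.findall(r"[^\W\d_]+", text.lower()))
    if not unique_words:
        return 0.0
    known = {word.lower() for word in known_words}
    new_words = unique_words - known
    return 100.0 * len(new_words) / len(unique_words)
```

With the text “Le chat et le chien” and a known vocabulary of “le”, “et”, and “chat”, one of four unique words is new, so the function returns 25.0.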

Then, maybe upon clicking the [Import] button, you have another brief, pre-populated screen in the extension where you confirm the tagging and categorization. Or maybe that can be avoided altogether and you just bring them straight into LingQ and they can do the tagging and categorization after the lesson because it’s really only relevant should they want to re-study the lesson a subsequent time.

Now, back to the immediate triggering question of this thread on how to produce a better error message. How about something like this in what would be a better overall UX, IMO:

Note that this happens all after just clicking on the LingQ browser extension’s icon. Note that there are no visual context clues that the user has done something wrong. Note that it’s clear in the wording that the problem is the video’s problem, not LingQ’s problem reinforcing the positive perceptions of your brand. Note that the call to action is to learn more about how to avoid this problem and engage YouTube-based comprehensible input more effectively.

Here’s how to engage YouTube filtering for just videos with subtitles/captions.

Finally, below are excerpts of the ChatGPT prompt and response used to help me with mocking up this idea.

I realize I put a lot of time into mocking this up for you. But… I’ve spent a lot of time on this platform learning French over the last couple of years and I’d really like to learn German here too over the next few years. I spend a lot of time every day on LingQ.

Beginning of ChatGPT Prompt:

I’m learning French and am at an “Advanced I” level. I’m considering studying the following YouTube video’s subtitles as a lesson. Please help me understand if I can successfully engage the text and if I’d be interested in the content.

Please tell me the following:

- Language: The language of the text.

- Level: The approximate complexity of the text based upon assessing its level in the CEFR framework, from A1 to C2.

- Keywords: Five keywords of the text that could be used as metadata tags, quickly revealing the nature of the content. List these five keywords in the same language of the text itself.

- Summary: A simple one-sentence summary of the text. Provide this summary in the same language of the text itself.

The first portion of the extracted YouTube video subtitles to use as the text follow:

(1) American Civil War (1861-1865): volume 1- The origins | History will tell us- Series #4 - YouTube

Aujourd’hui, je vous invite à découvrir ce que d’aucuns

pourraient qualifier de chapitre le plus lourd, le plus terrible,

le plus sanglant de l’histoire des États-Unis d’Amérique : la Guerre de Sécession.

Mieux connue aux États-Unis sous l’appellation de The American Civil War, la « Guerre civile…

ChatGPT Response:

Language: French

Level: Advanced (CEFR C1)

Keywords:

- Guerre de Sécession (Civil War)

- Esclavage (Slavery)

- Sécession (Secession)

- États-Unis (United States)

- Compromis (Compromises)

Summary: Le texte aborde les origines de la Guerre de Sécession aux États-Unis, mettant en lumière les tensions liées à l’esclavage et les compromis politiques qui ont échoué à apaiser la situation. La sécession de plusieurs États du Sud conduit à la formation des États confédérés d’Amérique, préparant ainsi le terrain pour le conflit à venir.
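If a response in this “Language / Level / Keywords / Summary” layout were to feed the extension's UI, it would need to be turned into structured data first. Below is a minimal Python sketch of such a parser. It assumes the model keeps to the labels used in the prompt above, which is not guaranteed; asking the model for JSON would likely be more robust. The function name `parse_analysis` and the dictionary keys are hypothetical.

```python
import re

# A CEFR level such as A1, B2, or C1 appearing as its own token.
CEFR_PATTERN = re.compile(r"\b([ABC][12])\b")


def parse_analysis(response):
    """Parse a labeled text response into a dict (best effort)."""
    result = {"language": None, "level": None, "keywords": [], "summary": None}
    in_keywords = False
    for raw_line in response.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # blank lines between items are ignored
        if in_keywords and line.startswith("-"):
            result["keywords"].append(line.lstrip("- ").strip())
            continue
        in_keywords = False
        label, separator, value = line.partition(":")
        if not separator:
            continue  # not a "Label: value" line
        label = label.strip().lower()
        value = value.strip()
        if label == "language":
            result["language"] = value
        elif label == "level":
            match = CEFR_PATTERN.search(value)
            # Fall back to the raw text if no CEFR code is present.
            result["level"] = match.group(1) if match else value
        elif label == "keywords":
            # Accept either "Keywords: a, b" or a bulleted list that follows.
            result["keywords"] = [k.strip() for k in value.split(",") if k.strip()]
            in_keywords = True
        elif label == "summary":
            result["summary"] = value
    return result
```

Applied to the response above, this would give a level of “C1” and a list of five keywords, which could pre-populate the tags instead of asking the user to enter them by hand.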

Really cool mock-ups. A lot of this information can be found if it’s marked as an external in the library and imported through that mechanism rather than a wild import. I have suggested subtitle-less videos importing if a librarian has transcribed that video but didn’t get traction.

I was having a look at the LingQ importer code, and your ideas will require a lot of work.

I’m all for moving work away from the language learners. Let’s make it as friendly, efficient, and positive as we can for the end users.

Any chance that in the future it imports videos w/o subtitles and uses Whisper to transcribe them (which you already support)?

Not sure if something like that is possible, but we’ll see what we can do.

I’m also popping in to express my interest in this idea!

More so to be able to import videos with no captions that have hardcoded/burned-in subtitles.

Many YouTube videos that would be excellent learning materials have both English (my Native language) and Korean (my TL) burned in subtitles but no YouTube/selectable captions.

Thanks for all you do, zoran!

I love the Whisper idea too!

I personally use LingQ mostly outside-in, not inside-out. Here’s what I mean…

Rather than going to LingQ to find content and use its topical categorization and level-based filtering, I start with the Internet and the content itself. I navigate YouTube for things in YouTube. I navigate Netflix for things in Netflix. I start with Google news for aggregated news articles. I start with favorite sites for their content.

If something interests me, I consume the content, like a regular web user in the language. If I’d benefit from engaging it within LingQ rather than from in its native format, I bring it into LingQ.

I think I’m able to find much more interesting content this way. If I look at the “News” category on my LingQ Lessons home page, the first news radio broadcast dates from March of 2021, the second from May of 2020, and the third from May of 2019. I contrast this with what Google News does with “Pour Vous” and its personalization algorithms, which surface the last few days’ news stories related to my interests.

I see LingQ’s value proposition being a tool that enables one to study anything on the internet. This is in part why I think the web extension is not just an on-the-side secondary utility but a primary enabler of the consumer choice of engaging the LingQ brand. This is also why I think Whisper integration would be awesome!

I just can’t see the LingQ paradigm being able to rest on its laurels too long in the new era of generative AI.

As a language learner, I look forward to what’s ahead!

Burned in subtitles would be quite an interesting process to extract. You would need to look at the whole video frame by frame like an image and OCR the text. Also any decently sized video should be done by a server side process as doing it in the browser would crash a lot of devices. If there is enough demand for it I will write this solution.
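To sketch one piece of that idea: once frames have been sampled and run through OCR (both assumed to happen upstream, server-side, with whatever tools are chosen), the same subtitle will show up in many consecutive frames and has to be collapsed into a single timed cue. The Python below shows only that merging step. The function name `collapse_ocr_samples` is hypothetical, and it compares text exactly, whereas real OCR output is noisy and would probably need fuzzy matching.

```python
def collapse_ocr_samples(samples):
    """Merge consecutive frames showing the same text into subtitle cues.

    samples: iterable of (timestamp_seconds, ocr_text) in chronological
    order, one entry per sampled frame. Frames with no text are skipped.
    Returns a list of (start, end, text) tuples.
    """
    cues = []
    current_text = None
    start = end = None
    for timestamp, text in samples:
        text = " ".join(text.split())  # normalize whitespace
        if text == current_text:
            end = timestamp  # same subtitle is still on screen
            continue
        if current_text:
            cues.append((start, end, current_text))
        current_text, start, end = text, timestamp, timestamp
    if current_text:
        cues.append((start, end, current_text))
    return cues
```

Sampling a couple of frames per second instead of every frame would keep the OCR workload much smaller, at the cost of less precise cue timings.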

Wow! I didn’t even think of this. That’s pretty cool!

I would mainly import them and use them as a chance to transcribe the TL being used in the video and then pop in the human translation myself (transcribing, of course). More manual work for sure, but typing practice can be good, too, lol!

P.S. I know there is a case for downloading the YouTube video myself, then importing, then doing all the transcribing, but I’ve found the downloading part to be more of a headache for me. The downloader I have limits things to 10 minutes in length, so it’s more cumbersome.