First, I don’t see any reason why either a word’s definition, or my knowledge of that word’s definition and usage, should change when a sidebar is activated.

A) The definitions of a word are independent of my knowledge of that word.

B) My knowledge of a word depends on that word’s conventional definitions, but is independent of my use of any language learning app and its features.

C) The features of the app should be based on this. As it stands, I see only unnecessary confusion added to the language learning experience: in one context a word appears as known, with its definition, and in another the same word appears as still needing a definition.

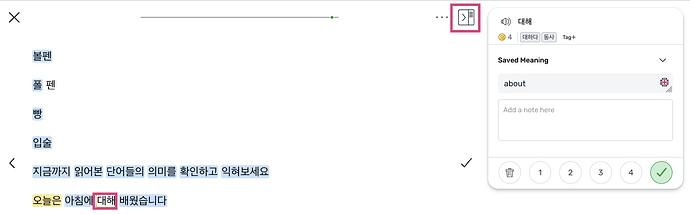

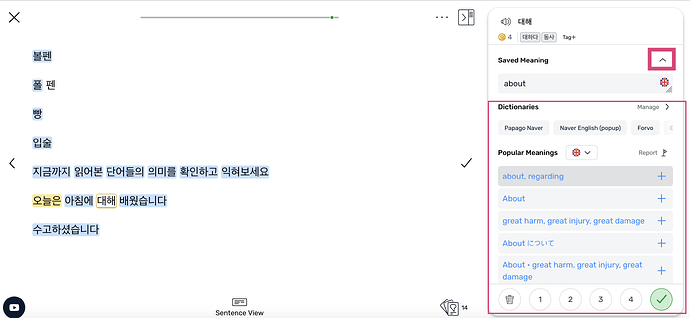

Second, it simply doesn’t work in the sidebar, either.

In addition to fixing the bug, I’m of the strong opinion that vocabulary management in LingQ needs to be substantially rethought in the era of generative AI.

Rather than manual integration with traditional dictionaries and self-managed vocabulary lists, what I’d really like to see when I simply click on a word is the kind of response this ChatGPT prompt produces.

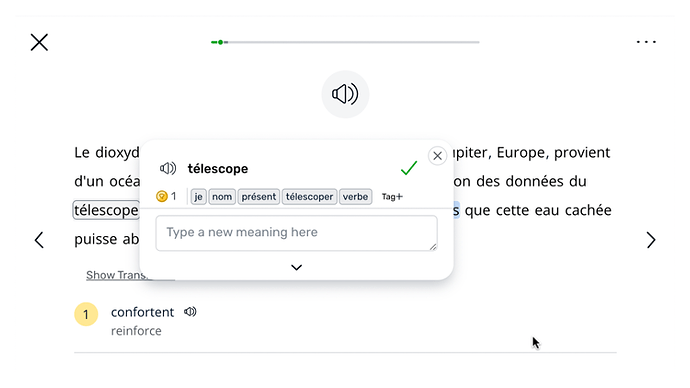

As brief as possible, even possibly expressed as a single word or maybe two or possibly three, what is the English definition of the French word “télescope” as used in the following sentence:

Le dioxyde de carbone détecté sur une des lunes de Jupiter, Europe, provient d’un océan situé sous son épaisse couche de glace, selon des données du télescope spatial James Webb qui confortent les espoirs que cette eau cachée puisse abriter la vie.

ChatGPT responds with:

telescope

Here’s another example…

As brief as possible, even possibly expressed as a single word or maybe two or possibly three, what is the English definition of the French words “Le dioxyde de carbone” as used in the following sentence:

Le dioxyde de carbone détecté sur une des lunes de Jupiter, Europe, provient d’un océan situé sous son épaisse couche de glace, selon des données du télescope spatial James Webb qui confortent les espoirs que cette eau cachée puisse abriter la vie.

ChatGPT responds with:

carbon dioxide

Rather than being asked for my own personal definition (with the “type a new meaning here” prompt) of a word I seem to have forgotten for a moment, I’d rather be shown a good, common, contextual definition like these.
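For what it’s worth, both prompts above follow the same template, so building one per click looks mechanical. Here is a minimal sketch in Python. The function name `build_definition_prompt` and its parameters are my own illustration, not anything from LingQ or from a ChatGPT API, and actually sending the prompt to a model is left out:

```python
def build_definition_prompt(
    term: str,
    sentence: str,
    source_lang: str = "French",
    target_lang: str = "English",
) -> str:
    """Build the contextual-definition prompt used in the examples above.

    Hypothetical helper: `term` is the clicked word or phrase and
    `sentence` is the sentence it appears in.
    """
    # The second example says "words" for a multi-word phrase, so mirror that.
    noun = "words" if len(term.split()) > 1 else "word"
    return (
        "As brief as possible, even possibly expressed as a single word "
        "or maybe two or possibly three, what is the "
        f"{target_lang} definition of the {source_lang} {noun} "
        f"“{term}” as used in the following sentence:\n\n"
        f"{sentence}"
    )


if __name__ == "__main__":
    example_sentence = "Le dioxyde de carbone détecté sur une des lunes de Jupiter, Europe."
    print(build_definition_prompt("télescope", example_sentence))
```

The point is only that the clicked term and its surrounding sentence are the two inputs; everything else in the prompt is fixed text.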

IMO, more than almost anything else, LingQ should show me the definition of a word when I click on it.