In this video I discuss some common misconceptions about language and vocabulary acquisition and share my strategy for learning vocabulary in a new language.

Steve says that, in his experience, the sheer volume of content is the biggest determining factor in vocabulary acquisition. This means we should be trying to consume as much content as we can. To do this, I’ve found:

- Listening to the audio while listening increases my reading speed (unless you are already a very advanced reader perhaps)

- Once you reach an intermediate level or above, increasing the audio playback speed in turn increases this speed, as @PeterBormann advocates

- The way LingQ manages definitions (choosing the best definition from a list) is a massive slowdown. Possible workarounds include turning on auto-lingQ, using @roosterburton’s extension to auto-lingQ all, or, best of all, using Language Reactor / @roosterburton’s Video Tools so that you get most of your definitions from the bilingual translations in the second subtitles instead of from dictionary look-ups

- Podcasts, interviews, and YouTube ‘talking heads’ are more word-dense than TV series, movies, and documentaries (usually by about double, unless you use @roosterburton’s Video Tools to speed up the non-speech sections)
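The idea of “word density” in the last point (words per minute of audio), together with the effect of playback speed from the earlier point, can be made concrete with a small Python sketch. The function names are hypothetical, and the “transcripts” are placeholder text with made-up word counts, not measurements of real podcasts or TV series.

```python
def words_per_minute(transcript: str, duration_minutes: float) -> float:
    """Word density: whitespace-separated words per minute of audio."""
    if duration_minutes <= 0:
        raise ValueError("duration must be positive")
    return len(transcript.split()) / duration_minutes


def effective_wpm(base_wpm: float, playback_speed: float) -> float:
    """Words heard per minute of your own time at a given playback speed."""
    return base_wpm * playback_speed


# Hypothetical example: a 'talking head' clip vs. a drama clip of equal length.
talking_head = "word " * 1500   # placeholder transcript: 1500 words in 10 minutes
drama = "word " * 750           # placeholder transcript: 750 words in 10 minutes

podcast_wpm = words_per_minute(talking_head, 10)   # 150.0
drama_wpm = words_per_minute(drama, 10)            # 75.0
print(podcast_wpm / drama_wpm)                     # 2.0, i.e. "about double"
print(effective_wpm(podcast_wpm, 1.5))             # 225.0 at 1.5x speed
```

The point of the sketch is simply that both levers multiply: a denser source and a faster playback speed each raise the number of words you are exposed to per minute of study.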

I like that he calls Chomsky’s universal grammar theory a myth. Chomsky is an ideologue, rather than a scientist, in my view.

This video is very interesting, and he is obviously one of the world’s most experienced and knowledgeable language learners, with a lot to share. However, it raises the question of whether the methods he uses are the best for everyone. Studies have shown that London taxi drivers, who have to pass The Knowledge by memorising an incredible number of routes, have modified brains. In particular, the hippocampus, a region involved in learning, is greatly enlarged. I would expect that Steve Kaufmann’s brain has also been modified through his massive experience of language learning. I’m not saying we should ignore his advice, quite the opposite, but it’s something to bear in mind.

My own experience of French and German over the past two years is that:

As Steve Kaufmann indicates, learning individual words with flash cards is in general bad. You don’t learn how to use the word. What preposition does it need? In which contexts can it be used? What are the associated words? It’s akin to learning the parts of a car engine, then being asked to replace the clutch. I started out learning individual words, and it took me a year to learn that he is right.

I do use, following his recommendation, a lot of audio and written input. However, I tend to only partially remember details. Thus, to take a trivial example, I might say J’ai renoncé de le faire instead of the correct J’ai renoncé à le faire. I do this all the time, and my solution is to write phrases on flash cards. This is akin to his method of revising a text or audio clip numerous times, but it focuses on specific structures. Perhaps he and others are able to pay more attention when listening to audio.

It’s all very well saying that the natural way is through massive exposure, but for kids, language acquisition is existential. I would argue that they pay far more attention than adults do and are able to focus on details better. And they are actively involved in learning. I believe we learn far better when words relate to experiences; reading a text isn’t the same. I find that Googling a word and looking at images helps a lot. The images create stronger associations.

The LingQ translations are often wrong because they take the words literally. Thus the vulgar **Cacher la merde au chat** actually means *to sweep it under the carpet*, and **Bouffer ta culotte** means to lose your money, for example when investing. My solution is to use Google and read the French-language articles that explain the meaning and origin of phrases. That, of course, has countless side benefits.

When watching a YouTube video with LingQ, the video is tiny, even on my 12.9” iPad, which essentially removes the visual clues and stimuli. That said, I find the feature extremely valuable.

Yes, I’ve come to similar conclusions (apart from the “Listening to the audio while listening” part. You mean “reading while listening”, right? :-)), but I’d like to add a few other things:

- A major flaw of a “pure” input-oriented approach is probably that it relies on more and more “recognition” operations (in popular wisdom misinterpreted as “passive” vocabulary) to tackle “use” operations (so-called “active” vocabulary).

In order for that activation to occur, you’ve got to switch to a “mass” immersion approach (in my experience, ca. 5-10 million words read and ca. 2000-5000 hours of listening to the appropriate content - for closer L2s).

The vast majority of language learners don’t (want to) do that because the time investment is too huge. Thus, it’s better to start with “use” operations (speaking, chatting, writing, L1->L2 translations) much earlier.

- The criticism of Chomsky’s Universal Grammar isn’t new (keyword: usage-based linguistics), but a particular twist may be that language learners don’t import content / language forms into their minds. My thesis is rather that they create an idiolect for themselves and then try to make it more and more similar to the language use they observe in social contexts.

In other words, there is neither an in-born “universal grammar” module in us (maybe some basic key distinctions such as “moving / not moving”) nor are there input / output operations where info is exported by senders and imported by receivers (that’s just a technical understanding of the transfer of data projected on human communication processes).

In brief, the whole interplay of psyche (for processing perceptions), consciousness (for processing language forms), language / media, and social communication processes is way more complicated than input-output / sender-receiver approaches suggest. I don’t see how Krashen, for ex., could explain that.

- At the beginning, it’s not a good idea to use “reading while listening” or “reading alone” (and this also means LingQ) with distant languages whose writing systems are very different from ours (for Indo-European native speakers, for ex., Asian languages), because this approach is extremely inefficient. See Steve’s experiences in Arabic here: https://www.youtube.com/watch?v=qudKA-Ws_zo

It’s much more efficient and motivating (!) to focus on fluency-first in the oral dimension without resorting to reading / writing. And this is exactly Jeff Brown’s approach (but I’d use just images and comic books): https://www.youtube.com/watch?v=illApgaLgGA

Note: I wonder if AI can be of help here as well…

- For distant L2s, it’s also a good idea to use grammar-light approaches à la Michel Thomas or Language Transfer to get a feel for basic grammar structures. Otherwise, it’s often really hard to understand sentence structures.

I’m extremely surprised that Steve (Kaufmann) didn’t use those strategies, but primarily relied on reading (/ listening) and LingQ. He should have known that his strategies (based on LingQ) wouldn’t work well with Arabic. And then tackling Arabic, Persian, and Turkish almost at the same time… at this point I lost all interest in what Steve has to say…
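For a sense of scale, the “mass immersion” figures given earlier in this post (ca. 5-10 million words read plus ca. 2000-5000 hours of listening) can be turned into calendar time. The sketch below is a back-of-the-envelope calculation; the reading speed of 150 words per minute and the budget of 2 hours per day are assumptions picked for illustration, not figures from the thread.

```python
# Back-of-the-envelope estimate of the "mass immersion" workload.
# ASSUMPTIONS (not from the thread): 150 words/min reading speed, 2 h/day total study.
READING_WPM = 150
HOURS_PER_DAY = 2


def reading_hours(total_words: int, wpm: float = READING_WPM) -> float:
    """Hours needed to read total_words at wpm words per minute."""
    return total_words / wpm / 60


def years_needed(total_hours: float, hours_per_day: float = HOURS_PER_DAY) -> float:
    """Calendar years needed to accumulate total_hours at hours_per_day."""
    return total_hours / hours_per_day / 365


# Low and high ends of the quoted ranges: (words read, listening hours).
scenarios = {"low": (5_000_000, 2000), "high": (10_000_000, 5000)}

for name, (words, listening) in scenarios.items():
    total = reading_hours(words) + listening
    print(f"{name}: {total:,.0f} h in total, "
          f"about {years_needed(total):.1f} years at {HOURS_PER_DAY} h/day")
```

Under these assumptions the low end comes out at roughly 3.5 years and the high end at roughly 8.4 years of daily study, which illustrates why most learners shy away from it.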

There is Noam Chomsky as a public intellectual and then there is Noam Chomsky as a leading scientific figure in modern linguistics who is also very influential in analytical philosophy, cognitive science and computer science.

With almost 500k citations on Google Scholar (https://scholar.google.com/citations?user=rbgNVw0AAAAJ) Chomsky is not simply a scientist, but a giant. Nevertheless, it’s fine for people to disagree with him on language, but it’s even better if they know what they’re talking about…

> The way LingQ manages definitions (choosing the best definition in a list) really is a massive slowdown. Potential ways of getting around this include: turning on auto-lingQ, using @roosterburton’s extension to auto-lingQ all, or really the best would be using Language Reactor /@roosterburton’s Video Tools so you get most of your definitions from the bilingual translations of the second subtitles instead of dictionary look-ups

How exactly do you combat this? I have that problem as well. I need to check every blue word against a dictionary, as I passed the threshold where unknown words have good definitions long ago. Most of the time I only have one definition available, which was autogenerated by LingQ and is wrong in 50% of cases. I check these words in Glosbe and copy the best definition. This is, of course, a time-intensive process. Auto-lingQing wouldn’t help me here, because then I would end up with roughly half of my lingQs being wrong.

What can you tell me about the second option? Language Reactor seems to have far more accurate translations, but I want to continue using LingQ.

Your rudeness is uncalled for but not unexpected.

I’m well aware of Chomsky’s reputation and work. A feature, in both his scientific and political work, is the creation of theories, based on how he thinks the world should work, rather than on evidence, with a very strong ability to ignore contradictory research, and alternative ideas. His followers have a reputation for being very aggressive and unpleasant towards other researchers. There is, or was, something of a personality cult around him.

You are clearly not aware that Chomsky is a highly divisive figure. There are many serious linguists who consider Chomsky responsible for taking the field down a rabbit hole and diverting resources. Steven Pinker is on record as saying that it is not good for the discipline for one figure to be so dominant. Experimental research has for a long while been providing substantial evidence against his ideas. Large Language Models provide strong circumstantial evidence against an innate language apparatus of the kind he has proposed.

I’ve heard several highly regarded polyglots publicly state that they do not accept Chomsky’s ideas on language acquisition. It is a shame he has never listened to the views of such people. His view that the brain has a so called Language Acquisition Device that shuts down before adulthood may well have harmed second language teaching. I’ve met countless people who disprove that idea. Only two days ago while donating blood, I met a German nurse who spoke beautiful English, and she grew up in East Germany.

As an aside, I did my PhD in physics, followed by several postdocs in England and Canada, so I have some knowledge of academic research. In general, science is a collaborative process and should be grounded in observation, not theory.

“As an aside I did my PhD in physics, followed by several post docs in England and Canada so I have some knowledge of academic research.”

So, you have never studied languages / linguistics / communication at university. Well, I have - both on a Master’s and PhD level. In addition, I’ve taught L2s for ca. 20 years before switching completely to IT a few years ago (having studied computer science as well).

Be that as it may, what you wrote is essentially based on what others have said (many serious linguists, Pinker, highly respected polyglots, countless people) because your own academic knowledge in those areas seems to be at the same lay level as your experience as a language learner.

“You are clearly not aware that Chomsky is a highly divisive figure.”

Sorry, sir. You are not able to decide “clearly” what I’m aware of or not when it comes to Chomsky’s theory / influence in particular and SLA / linguistics / communication research in general.

I get the impression that you are not in a position to teach me “anything (new)” about how language processing/learning works (both in theory and in practice) that I don’t already know, so we can end our little discussion here.

Sigh… Well, since Chomsky has come up: his “Universal Grammar” idea is simply nonsense whose goalposts have been shifted to the point of absurdity.

If Chomsky had come out and said that his grand linguistic theory is that human beings have the ability to learn and produce language, he would have been laughed out of the room. And yet, that is the precise claim “Universal Grammar” has been whittled down to over the decades. The original claim, meanwhile, was hardly more than a linguistic reformulation of Platonism.

As often with nonsensical scientific concepts, there have been some very useful descriptive and computational concepts and tools built on top of the theory to make it work. Many of these tools remain valid and useful to describe languages and grammars despite their origin in nonsense science – but that doesn’t make the original idea any more valid than the precision and sophistication of epicycles makes the geocentric universe correct.

I wish you would stick to substance instead of ad hominem attacks.

That many of Chomsky’s views are being shown to be false is a fact, not an opinion. Large Language Models are proving that a highly generalised neural network can demonstrate a sophisticated use of language, thus disproving Chomsky’s assertion that a language-specific architecture is required. That countless people have learned a second language to native level as adults disproves his assertion that a so-called Language Acquisition Device exists and shuts down before adulthood. There is plenty of other evidence that argues against Chomsky’s innate language theory. For example, some South American Indian languages lack certain grammatical features that are supposed to be present in all languages, which supports the view that the brain does not have a dedicated language architecture and that language instead evolves as part of culture. I recommend The Unfolding of Language by Guy Deutscher if you haven’t already read it. It is a fascinating book. Steven Pinker is a highly respected linguist, so I am happy to accept his view that Chomsky has had too much influence in linguistics.

Having a PhD in a field does not make one an expert in that field. Thus you are no more an expert in linguistics than I am an expert in physics. What it does mean is that we have each studied in detail one very small area of our subject field. We might even have been world leaders in that small area. But I would never claim to be an expert in physics, far from it. I only have a superficial knowledge of other areas of physics. Indeed I once worked alongside a PhD in image processing. One day she asked why her image spectra had a specific form. I knew that it was due to posterisation as I had been interested in photography for decades. When she left, I took over the software she worked on, which performed processing of images from a document reader. I found and fixed countless serious bugs after the Swedish government sent machines back. A PhD does not equate to broad expertise.

Yes I have studied linguistics, having read very widely over many years. If we are to take your view that only those with a PhD know about linguistics, then we must also conclude that countless well known polyglots know nothing about linguistics. We must also conclude that Elon Musk knows nothing about rocket science, and Bill Gates knows nothing about computer science. Clearly that would be absurd.

> I get the impression that you are not in a position to teach me “anything (new)” about how language processing/learning works (both in theory and in practice) that I don’t already know, so we can end our little discussion here.

What an astonishing remark.

Generative grammar is indeed clever nonsense.

For many years Chomsky continued to support the Pol Pot regime in Cambodia despite compelling evidence that it was engaging in genocide. I won’t mention his other political ideas out of respect for the forum as it is too off topic.

@PeterBormann I don’t disagree that it is much more efficient to ‘activate’ your ‘passive’ vocabulary through speaking, writing, and other use operations instead of banging your head against the wall with ‘input only’ to achieve a similar result. In the end, that will never work out. As a German, I’m sure you’ve visited Austria and Switzerland and are well aware of the people in the mountains and elsewhere who understand High German perfectly but fail to speak it well and speak dialect instead, despite knowing very well that you want them to speak High German.

The issue with Jeff Brown’s comprehensible-input route to spoken fluency is that it is either not super efficient (doing language exchanges, as he did) or quite expensive (hiring a tutor). Still, if you have the money, I think having a private tutor who knows your vocabulary level, interests, etc. and feeds you accordingly would be the fastest way to spoken fluency. As I’ve mentioned to you, I was doing this myself for Russian for a little while (if you can get hold of the picture cards from board games like Dixit, they are great to start off with; then move on to coloured comic books, which are far superior to children’s books and black-and-white manga).

As for @steve’s experience with Arabic, I think there are several things which slowed him down, compared to Jeff Brown’s one year experience:

- Juggling two other languages, which he admitted was an overly enthusiastic folly

- Switching from standard Arabic to Lebanese Arabic, which is understandable

- His choice of study materials could potentially have been a bit too focused on low-frequency vocabulary, found in books and in podcasts on niche topics aimed at educated native speakers. If this was the case, it’s definitely a handicap

- He has spent a lot of time using Sentence View, which is a very slow way to study. He also does the little quiz games, which personally aren’t my cup of tea. As you mentioned, this is where grammar study helps, a conclusion he has also come to, and he has started doing more of it.

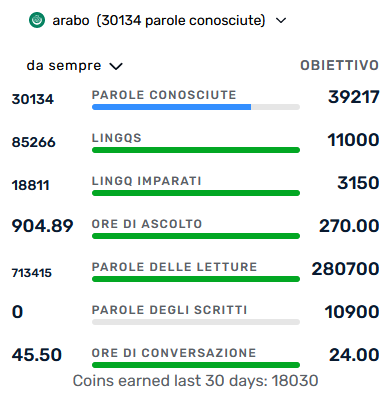

Here are @steve’s Arabic stats:

- He has 85k lingQs. If he doesn’t have auto-lingQ on, this takes a lot of time! Even at one second per lingQ creation, that’s over 23 hours of completely wasted time. If he wrote the occasional definition or even peeked at a dictionary, you would be looking at much more time wasted.

- You can also see that he hasn’t actually read many words at all. He’s only recorded 713k words read on LingQ over the last six years. I’ve recorded that many words read in Russian since starting it about six months ago (I mainly thank Language Reactor for my increase in words read). With these numbers, it’s no wonder he still struggles with the Arabic writing system. Personally, I still feel quite far from being able to read Russian any faster than a snail’s pace. I have to see the Russian word, pronounce it out loud, and only from the sound do I understand the meaning. Even though we are discussing vocabulary here, you need huge amounts of reading to drill in these different alphabets, etc. so that words go straight from their written form to meaning instead of requiring pronunciation as an intermediary. Or so my personal experience suggests, anyway.
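The arithmetic in the two points above can be checked with a few lines of Python. The 85k lingQs, the 713k words, and the six years versus six months come from the post; the 5-seconds-per-lingQ case is a hypothetical stand-in for “writing the occasional definition or peeking at a dictionary”, and six months is approximated as 182.5 days.

```python
def lingq_hours(n_lingqs: int, seconds_each: float) -> float:
    """Total hours spent creating n_lingqs at seconds_each seconds per lingQ."""
    return n_lingqs * seconds_each / 3600


def words_per_day(words: int, days: float) -> float:
    """Average words read per day over a period."""
    return words / days


# Figures from the post: 85k lingQs; 713k words over six years vs. six months.
for seconds in (1, 5):  # 5 s is a hypothetical "peek at a dictionary" case
    print(f"{seconds} s/lingQ -> {lingq_hours(85_000, seconds):.1f} h")

steve_rate = words_per_day(713_000, 6 * 365)   # six years
my_rate = words_per_day(713_000, 182.5)        # roughly six months
print(f"{steve_rate:.0f} vs {my_rate:.0f} words/day, ratio {my_rate / steve_rate:.0f}x")
```

That works out to about 23.6 hours at one second per lingQ, roughly 118 hours at five seconds, and about 326 versus 3,907 words read per day, i.e. a twelvefold difference in reading volume.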

> How exactly do you combat this? I have that problem as well. I need to check every blue word against a dictionary, as I passed the threshold where unknown words have good definitions long ago. Most of the time I only have one definition available, which was autogenerated by LingQ and is wrong in 50% of cases. I check these words in Glosbe and copy the best definition. This is, of course, a time-intensive process. Auto-lingQing wouldn’t help me here, because then I would end up with roughly half of my lingQs being wrong.

When you are encountering words with no Community Definitions, you are either trail-blazing a language or are advanced in a language and encountering very low-frequency words. Neither case is great for a learner. It’s language dependent, but if your Google Translate / DeepL translations are often wrong (usually because one word has multiple meanings), then you only have a few options:

- Simply not care, turn on auto-lingQ, and resign yourself to wrong definitions often

- Manually write out your definitions (takes a lot of time and effort)

- Search the Internet and find a dictionary to import or make one yourself (can be time consuming to find one and format it accordingly). Note: all definitions you write are shared, so ideally this dictionary is public domain or Creative Commons

Personally, I’ve written out a lot of definitions myself and wasted a lot of time. I’ve also tried looking for a dictionary to import, without much success. These days I’ve moved the majority of my studies away from LingQ. Only occasionally do I study on LingQ, and then mainly to re-study content and avoid the faff of making lingQs. A sentence translation will usually provide the definition you are looking for (if you keep the number of unknown words in a sentence to one or two; otherwise it gets too hard to guess which definition belongs to which unknown word). Unfortunately, on LingQ you can’t really use the sentence translation (either in Sentence View or via Show Translation in Page View) as your sole means of understanding a word: if you don’t make a lingQ to turn the blue word yellow, it gets sent to Known when you press ‘Complete lesson’, even accidentally (hopefully this will change soon!). So if you work this way, you have to stop caring about highlighting and stats. Furthermore, I don’t find the text size and layout of Show Translation in Page View easy to use. They really need to change the size and layout of it.
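The “one or two unknown words per sentence” rule of thumb above is easy to apply mechanically if you have a list of words you already know. The following is a hypothetical Python sketch, not a LingQ or Language Reactor feature: the known-word set and the example sentences are made up, and the regex tokenizer is deliberately crude (it splits on anything that isn’t a letter or digit, so a contraction like J’ai becomes two tokens).

```python
import re

# Crude tokenizer: runs of Unicode letters/digits (Python 3 str patterns are Unicode-aware).
WORD_RE = re.compile(r"\w+")


def unknown_words(sentence: str, known: set[str]) -> list[str]:
    """Return the words in sentence (lowercased) that are not in the known set."""
    return [w for w in WORD_RE.findall(sentence.lower()) if w not in known]


def study_candidates(sentences: list[str], known: set[str], max_unknown: int = 2):
    """Keep sentences with at least one but no more than max_unknown unknown words."""
    picked = []
    for sentence in sentences:
        unknown = unknown_words(sentence, known)
        if 1 <= len(unknown) <= max_unknown:
            picked.append((sentence, unknown))
    return picked


# Hypothetical data for illustration only.
known = {"il", "a", "le", "faire", "elle", "mange", "une", "pomme", "nous"}
sentences = [
    "Il a renoncé à le faire.",                 # 2 unknown: renoncé, à
    "Elle mange une pomme.",                    # 0 unknown: nothing new to learn
    "Nous avons cherché partout sans succès.",  # 5 unknown: too many to untangle
]

result = study_candidates(sentences, known)
for sentence, unknown in result:
    print(sentence, "->", unknown)
```

Filtering on “at least one, at most two” drops sentences that teach nothing new as well as those where you can’t tell which part of the translation belongs to which unknown word.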

TL;DR Personally, I’ve moved most of my studies to Language Reactor, where I get the vast majority (90%+) of definitions by glancing down at the English translation (ideally human-written, but AI-generated is usually alright too for the languages I study). The upside is that it also takes far fewer clicks, so you can cover much more content.

Edit: There is also @roosterburton’s Reader, which uses the lesson’s auto-scrolling Lyrics Mode, but adds the translations under each sentence, highlighting, etc., which should really be available on LingQ to begin with. I’ve only tried his free version, which is less than ideal. The premium version allows you to change the colours, font sizes, etc. which is what you really need to be able to set up a layout which is easy on the eyes. The most important part of this is that you need short paragraphs to be able to get the benefit of the translations to find the definition of the unknown word. If the sentences are long and complex, like in a book, it’s just too challenging to scan the equally complex and long sentence translation to find the missing word before it switches to the next sentence. You really need short sentences, similar to subtitles, which often means studying subtitles themselves and if you’re doing that you might as well do it directly on YouTube with another tool.

> Thus, it’s better to start with “use” operations (speaking, chatting, writing, L1->L2 translations) much earlier.

This is an old thread, but I’m wondering if @PeterBormann or others can elaborate on examples of the “Use Operations” exercises he listed in the parentheses. I think this is what I’m doing now with my language learning… because I thought that if I only read, my learning would become less effective, as my lazy brain would inevitably slip from active to passive mode while I consume content. So I’ve been supplementing with Use Operations, but I want to make sure I’m doing it effectively.

All opinions are appreciated and welcome; no need to debate which are right or wrong. Thanks!


Hi mctog,

Unfortunately, I’m involved in a huge IT project with a crazy deadline at the moment. Therefore, I don’t have time for SLA / forum discussions…

Maybe others can chime in here.

Or better yet, you could open a new thread in the Open Forum with exactly this question so that you increase your chance of getting more opinions / ideas.

I wish you luck / success!

> can elaborate on examples of “Use Operations” exercises

‘Use operations’ / ‘production’ are the exercises that make you use/produce the language: speaking, writing, or translating from your native language into the target language (i.e. L1 → L2). Concrete examples include having a conversation with someone, speaking to yourself, writing a journal, having a conversation with ChatGPT, writing reviews on Google / Apple Maps, or taking an article in your native language and translating it into your target language.

“word dense” yes! More words per minute.